Robo-gun and the soldiers

1 Nov, 2011

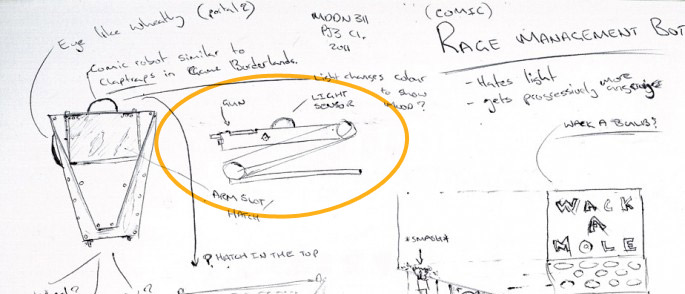

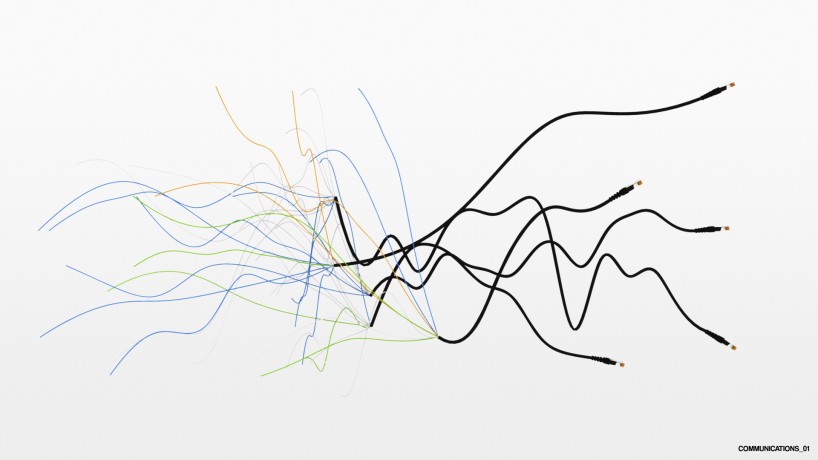

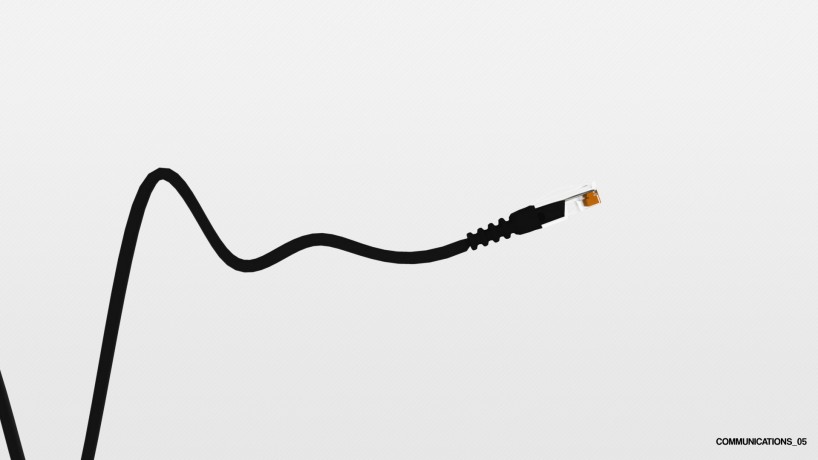

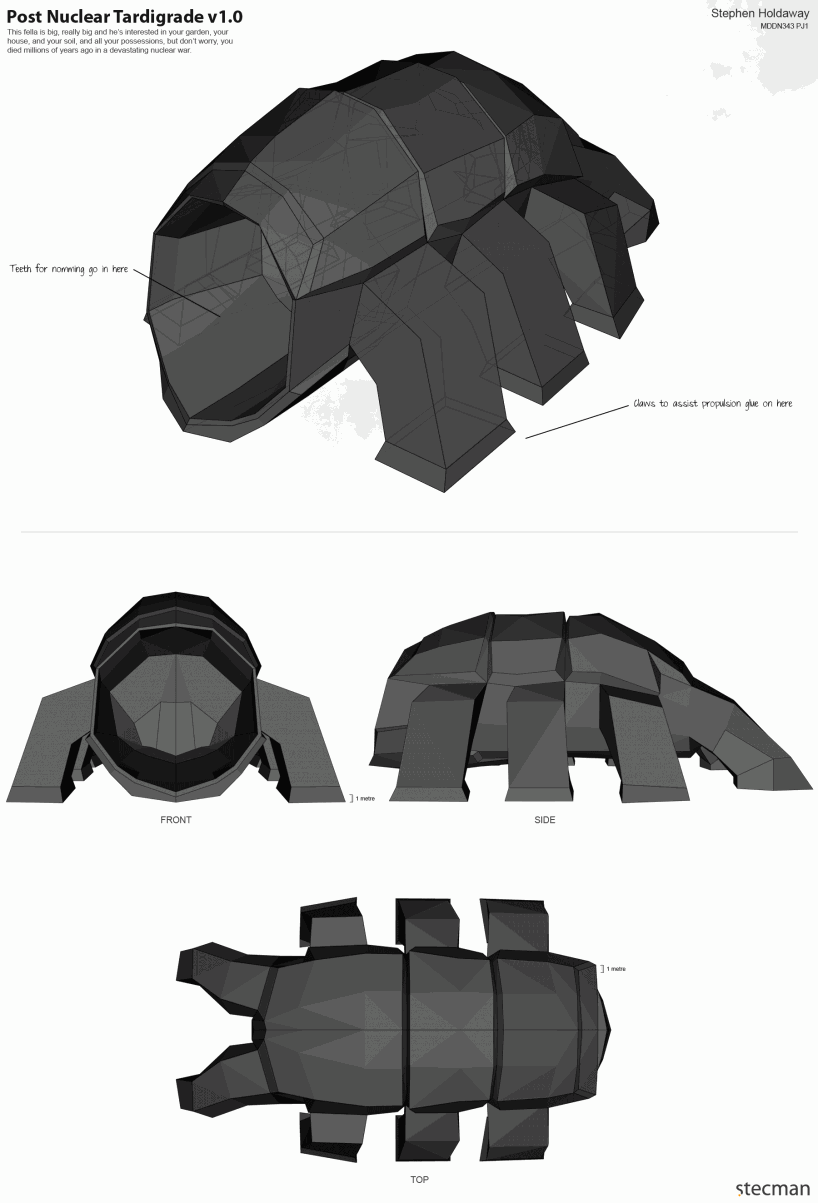

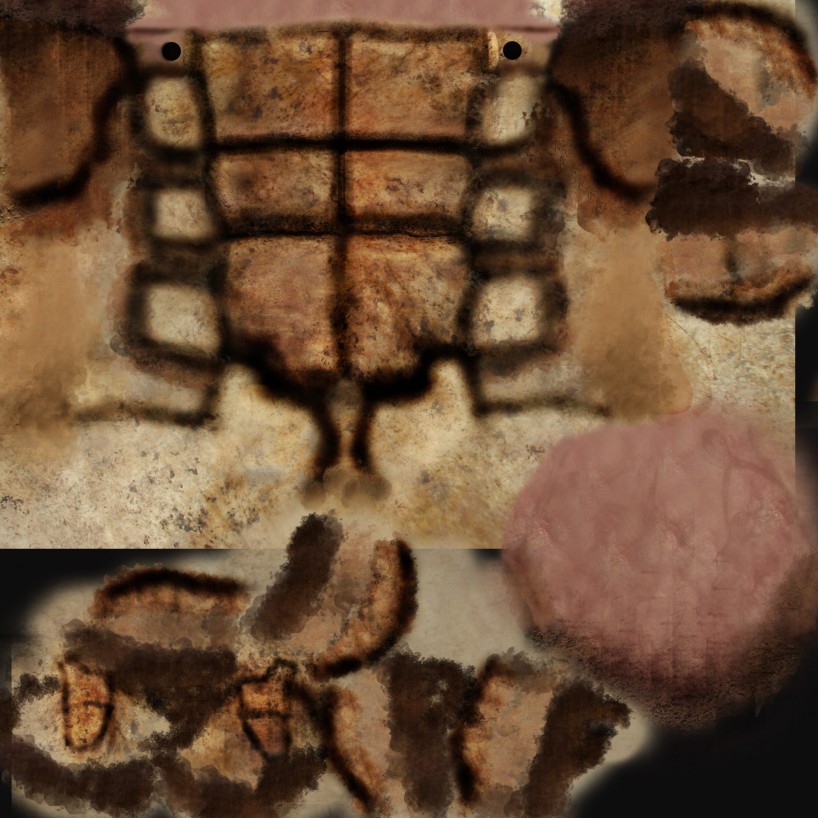

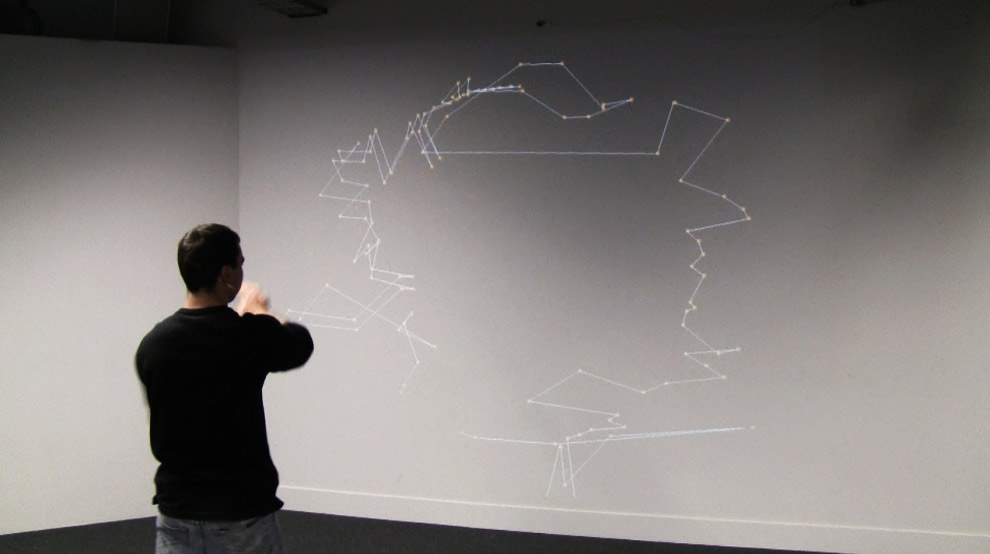

In this project you will design a non-biologically based character that will navigate and react to an environment. You should focus primarily on the behavior of this “character”, but also find a distinctive and economical visual representation, one that is a compelling but minimal showcase for the behavior you create.

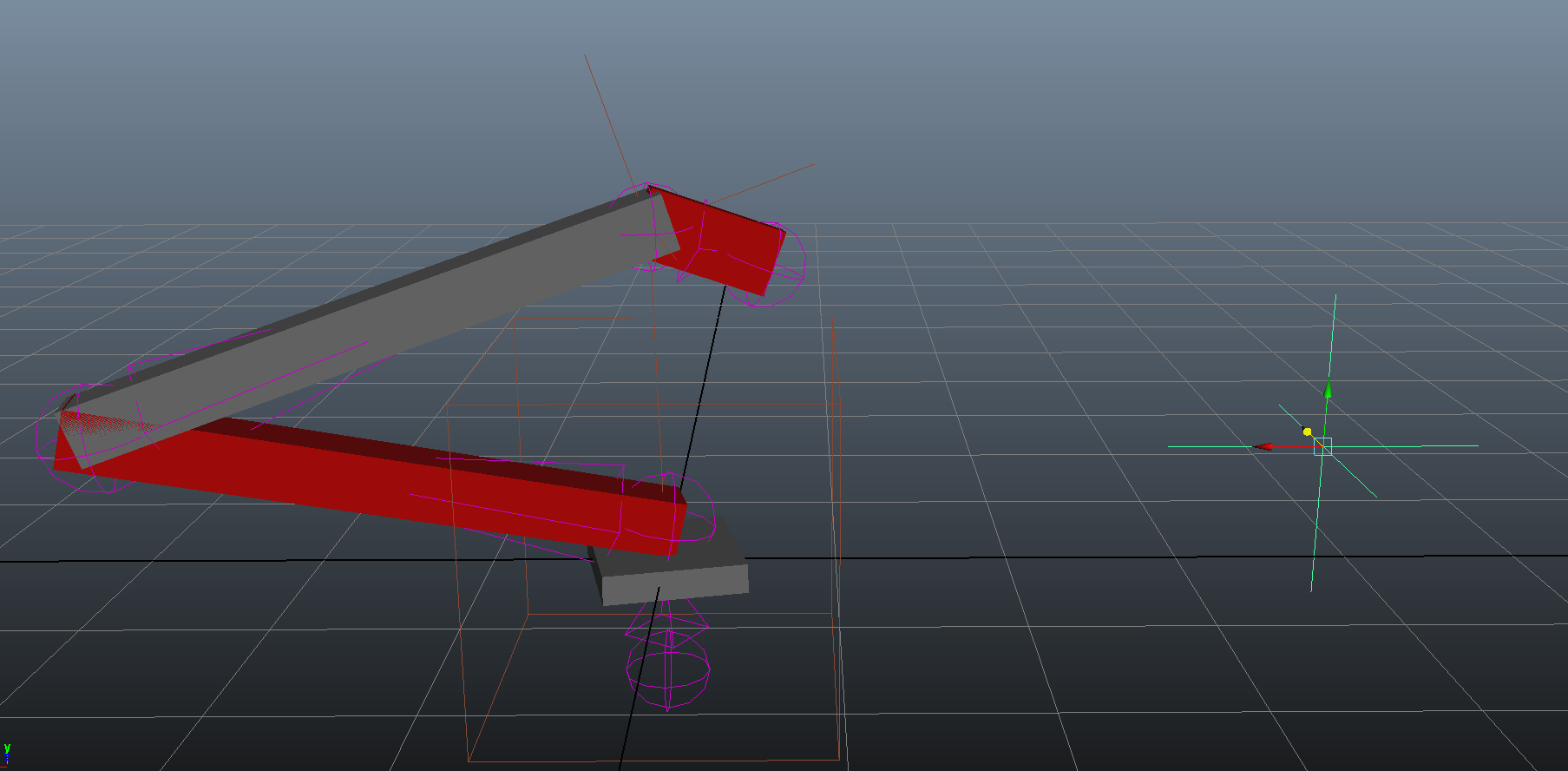

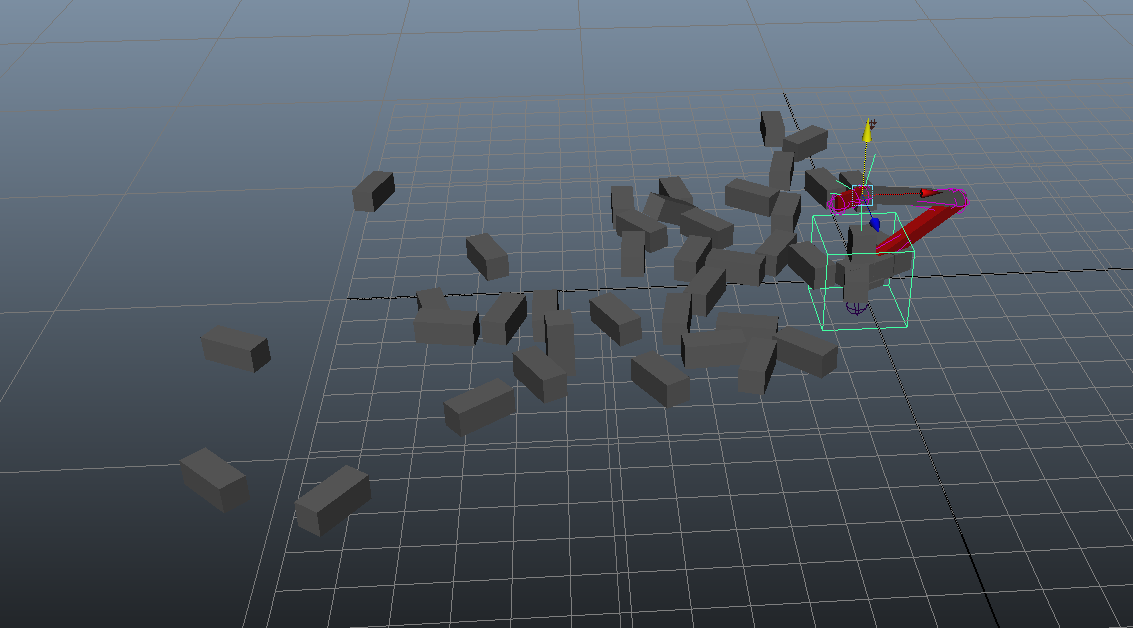

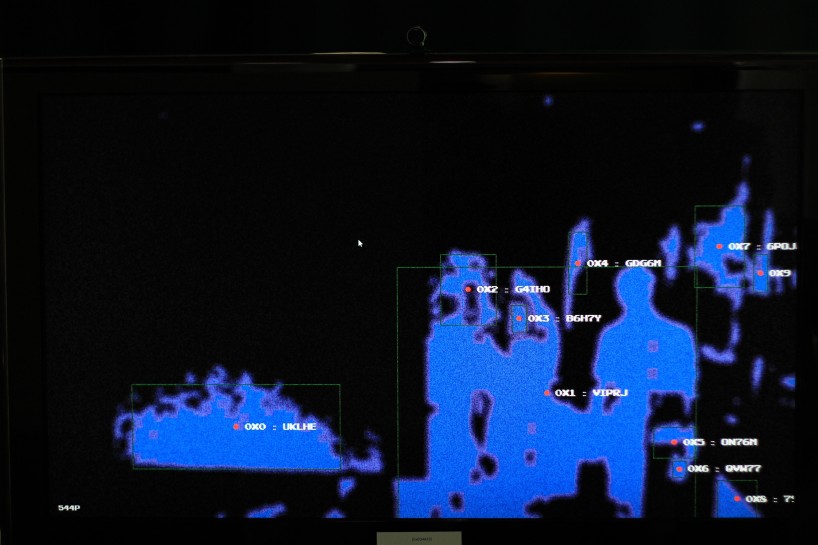

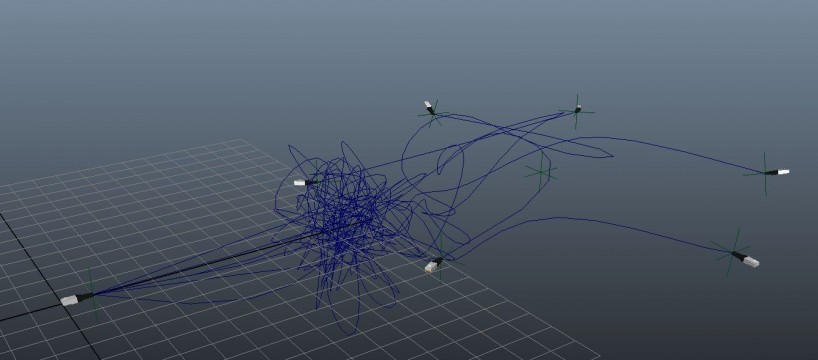

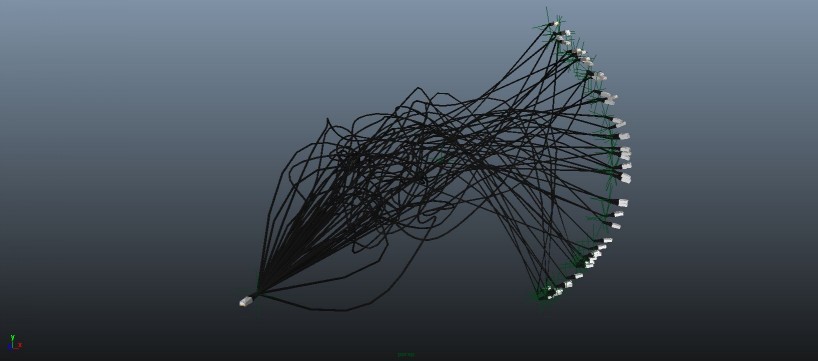

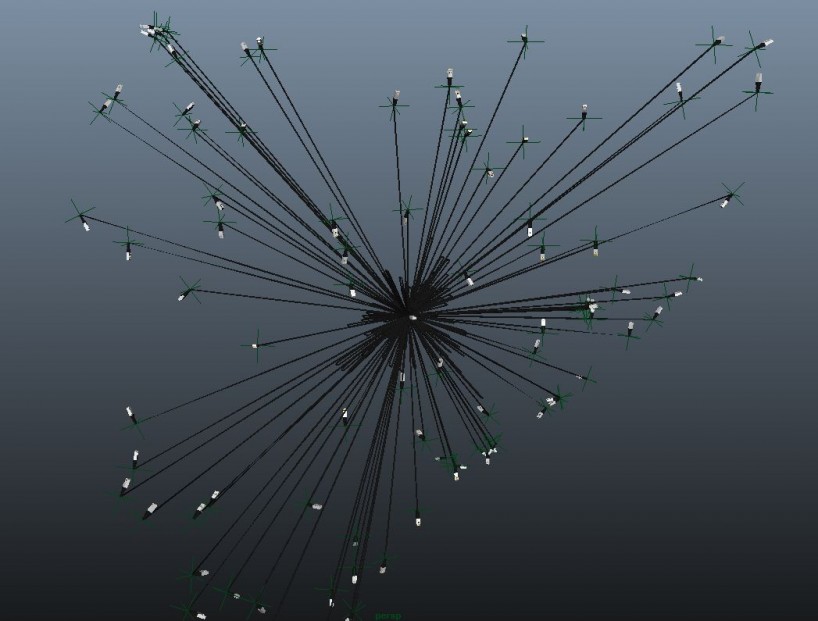

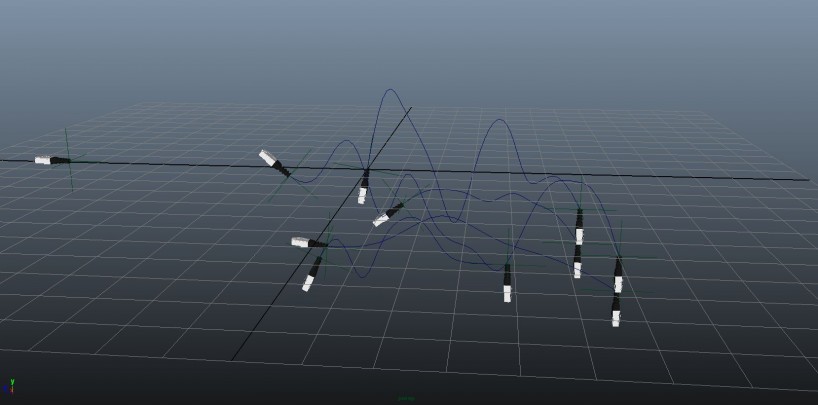

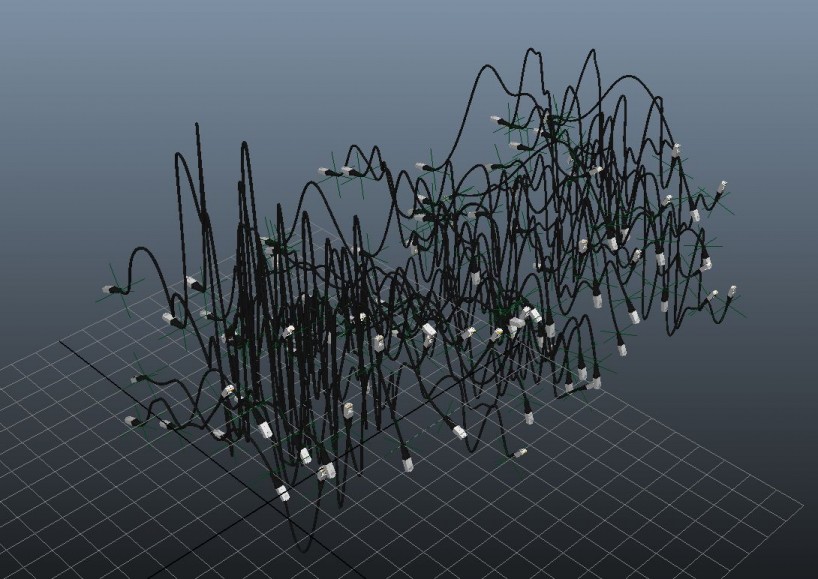

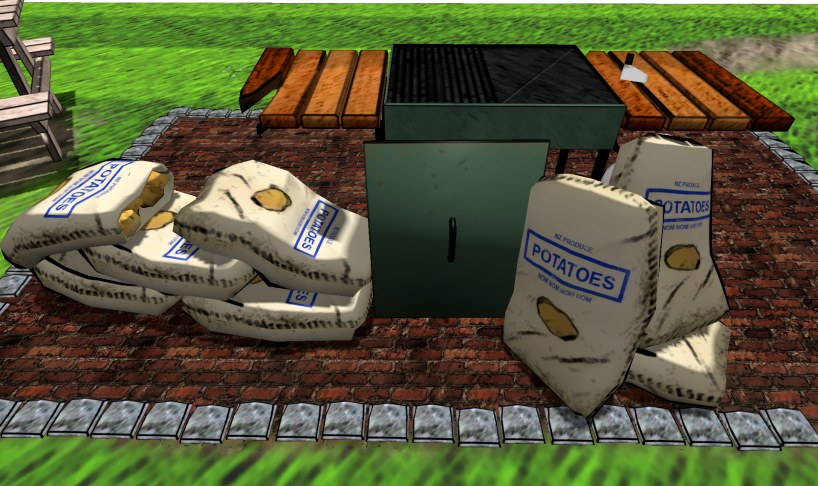

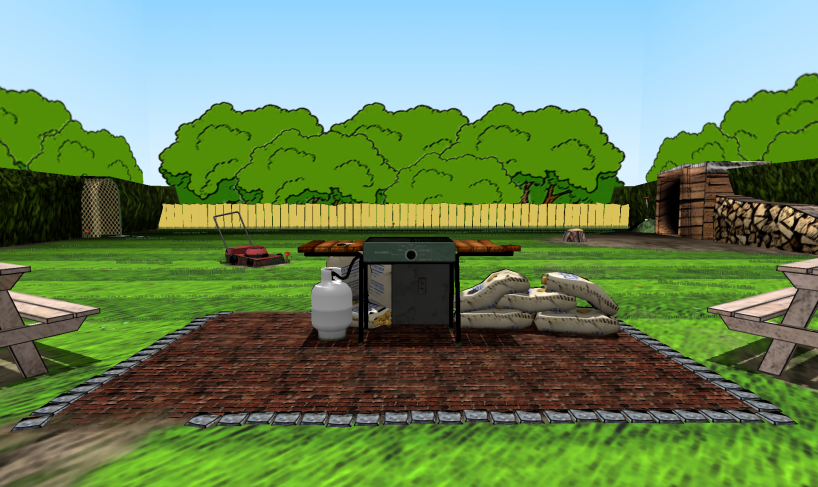

Robo-gun is intent on destroying the incoming plastic soldiers (which oddly resemble slime-covered zombies) and does so with deadly precision. A Python script generated and key-framed all behavior in this scene. Under the hood are some driven keys, expressions, and rigid-body simulations to simplify the aiming and animations. There’s a bit of jumpiness when the invaders are shot, since I didn’t work out how to cache the rigid-body simulations or set the initial simulation frame for each soldier.
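To give a rough idea of what script-generated aiming can look like, here is a minimal sketch in plain Python. It is not the project's actual script: the function names `aim_angles` and `aim_keyframes`, the Y-up convention with yaw 0 facing +Z, and the fixed `frames_per_target` spacing are all assumptions made for illustration. It only computes the numbers; inside Maya, each resulting tuple could then be keyed onto the gun's rotation attributes.

```python
import math


def aim_angles(gun_pos, target_pos):
    """Return (yaw, pitch) in degrees pointing from gun_pos toward target_pos.

    Assumption: Y-up scene, yaw 0 faces +Z, positive pitch looks upward.
    """
    dx = target_pos[0] - gun_pos[0]
    dy = target_pos[1] - gun_pos[1]
    dz = target_pos[2] - gun_pos[2]
    # Yaw is the heading in the ground (XZ) plane.
    yaw = math.degrees(math.atan2(dx, dz))
    # Pitch is the elevation above the ground plane.
    pitch = math.degrees(math.atan2(dy, math.hypot(dx, dz)))
    return yaw, pitch


def aim_keyframes(gun_pos, targets, start_frame=1, frames_per_target=12):
    """Build one (frame, yaw, pitch) tuple per target, evenly spaced in time.

    frames_per_target is a hypothetical pacing value, not taken from the project.
    """
    keys = []
    for i, target in enumerate(targets):
        frame = start_frame + i * frames_per_target
        yaw, pitch = aim_angles(gun_pos, target)
        keys.append((frame, yaw, pitch))
    return keys


if __name__ == "__main__":
    soldiers = [(0.0, 0.0, 5.0), (5.0, 0.0, 0.0), (3.0, 1.0, 4.0)]
    for frame, yaw, pitch in aim_keyframes((0.0, 0.0, 0.0), soldiers):
        print(frame, round(yaw, 2), round(pitch, 2))
```

Keeping the math separate from the scene calls means the aiming logic can be checked outside Maya before any keys are set.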

The source can be downloaded here under a Creative Commons Zero licence, and there’s a detailed write-up on this project with more images here (PDF, 1.25MB).

Development

I really enjoyed this project and had a good bit of fun. While it wasn’t required, I do wish I had spent more time on the aesthetic and render quality. The models weren’t great (though they were functional), and the render quality really suffered because of time constraints (I had to significantly lower the render settings).

What was left out

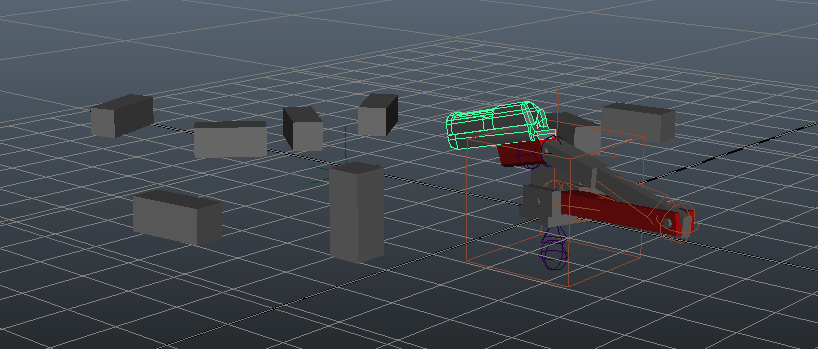

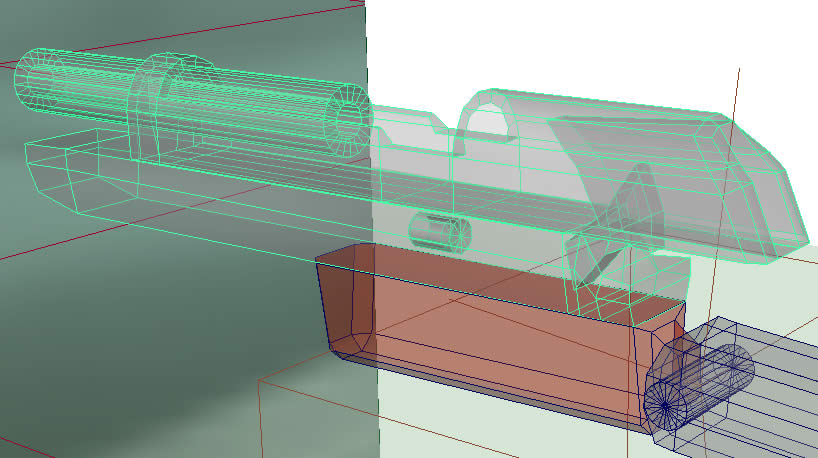

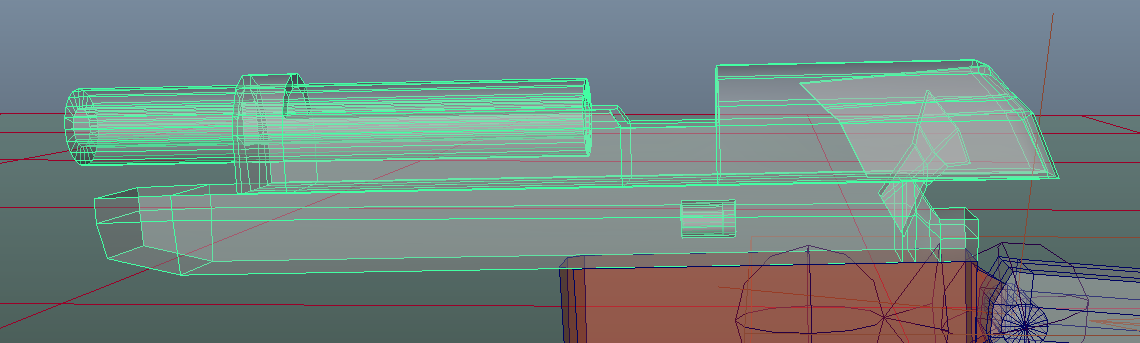

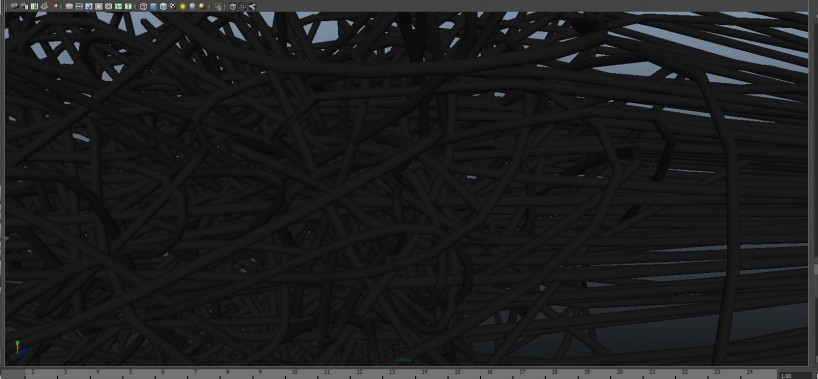

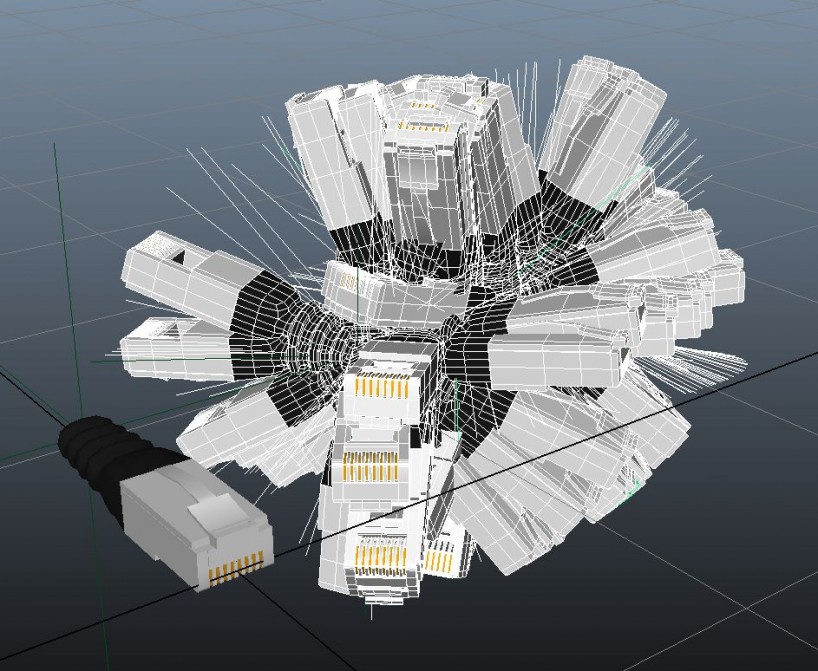

I began working on two things that ended up getting left out because of time and bugs: particle muzzle flash and cartridge ejection from the gun. I almost had cartridge ejection nailed, but it unexpectedly caused a massive bug that I couldn’t trace, which blocked the generation script from running at all unless I deleted the duplicates of the cartridge reference (possibly a duplicate-name issue). The cartridge ejection used rigid bodies like the soldier deaths, and it looked pretty darn awesome during generation the one and only time I managed to get it to run. You can see the cartridge reference model hiding inside the gun x-ray view below.

I started experimenting with particles for muzzle flash, but ran out of time as it caused Maya to crash frequently. I didn’t get any screenshots of this, but it was pretty basic – just a directional particle emitter at the end of the barrel, whose emission rate would be hooked into the custom attribute that controlled the slide and hammer action.
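As a rough illustration of hooking an emission rate to the slide attribute, the mapping could be as simple as the sketch below. This is plain Python rather than the Maya setup itself, and everything in it is an assumption: the 0 to 1 range for the slide value, the `threshold` below which nothing is emitted, and the `max_rate` figure are hypothetical, since this part of the project was never finished.

```python
def emission_rate(slide, max_rate=200.0, threshold=0.1):
    """Map a slide-action value (assumed 0..1) to particles per second.

    Below `threshold` the gun is treated as at rest and emits nothing;
    above it the rate ramps linearly up to `max_rate`.
    """
    # Clamp so out-of-range attribute values cannot produce a negative
    # or runaway emission rate.
    slide = min(max(slide, 0.0), 1.0)
    if slide < threshold:
        return 0.0
    return max_rate * (slide - threshold) / (1.0 - threshold)


if __name__ == "__main__":
    for value in (0.0, 0.05, 0.5, 1.0):
        print(value, emission_rate(value))
```

Because the flash would be driven by the same attribute as the slide and hammer, it would stay in sync with the firing animation without needing its own keys.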